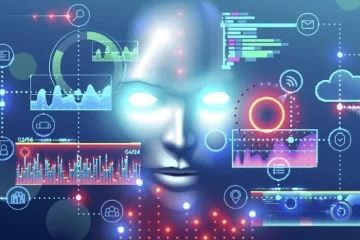

Artificial intelligence (AI) is becoming more powerful and capable. As a result, AI applications are multiplying across fields ranging from healthcare to transportation to industry. However, with great power comes great responsibility. It is critical to ensure that AI systems are used in a safe, ethical, and responsible manner.

The practice of managing and regulating AI systems is known as AI capability control. It involves establishing boundaries, limits, and standards to ensure that AI operates safely, ethically, and responsibly.

There are many techniques for controlling AI capabilities. Among the most common approaches are:

Ethical principles and standards

These are guidelines that specify what conduct is acceptable and unacceptable for AI systems. The Partnership on AI (PAI) AI Principles for Responsible Development, for example, state that AI systems should be developed and used in a manner that respects human rights, minimizes harm, and promotes fairness.

Technical restrictions

Technical restrictions are mechanisms that can be used to limit an AI system's capabilities. AI systems may, for example, be trained to access only certain data or to perform only specific tasks.
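One way to picture a technical restriction is an allowlist that is checked before a request ever reaches the AI system. The sketch below is only illustrative: `CapabilityPolicy`, `run_task`, and the task and dataset names are hypothetical, and it enforces limits at run time rather than through training.

```python
from dataclasses import dataclass, field


class CapabilityError(PermissionError):
    """Raised when a request falls outside the configured limits."""


@dataclass(frozen=True)
class CapabilityPolicy:
    # Hypothetical allowlists; a real deployment would load these from config.
    allowed_tasks: frozenset = field(default_factory=frozenset)
    allowed_datasets: frozenset = field(default_factory=frozenset)

    def check(self, task: str, dataset: str) -> None:
        """Raise CapabilityError unless both task and dataset are allowlisted."""
        if task not in self.allowed_tasks:
            raise CapabilityError(f"task not permitted: {task}")
        if dataset not in self.allowed_datasets:
            raise CapabilityError(f"dataset not permitted: {dataset}")


def run_task(policy, task, dataset, handler):
    """Call handler(dataset) only after the policy check passes."""
    policy.check(task, dataset)
    return handler(dataset)


# Illustrative policy: one permitted task and one permitted data source.
policy = CapabilityPolicy(
    allowed_tasks=frozenset({"summarize"}),
    allowed_datasets=frozenset({"public_docs"}),
)
```

With this policy, a call such as `run_task(policy, "summarize", "public_docs", handler)` goes through, while the same call against a dataset that is not on the allowlist raises `CapabilityError` before the handler runs.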

Transparency and accountability

Transparency and accountability measures help ensure that AI systems are understood and that their developers are held responsible for the systems' behavior. AI systems may, for example, be required to explain their decisions in a form that humans can understand.
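As a rough illustration of a decision that explains itself, the sketch below attaches plain-language reasons to the output of a simple weighted score. Everything in it is an assumption made for the example: the feature names, the weights, the threshold, and the `decide` function are hypothetical, and real AI systems usually need far more sophisticated explanation methods.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Decision:
    approved: bool
    score: float
    reasons: List[str]  # human-readable explanation, strongest factor first


# Hypothetical weights and threshold, chosen only for illustration.
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
THRESHOLD = 1.0


def decide(features: Dict[str, float]) -> Decision:
    """Score the input and report how much each feature moved the score."""
    contributions = {
        name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS
    }
    score = sum(contributions.values())
    # Rank features by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [
        f"{name} {'raised' if value >= 0 else 'lowered'} the score by {abs(value):.2f}"
        for name, value in ranked
    ]
    return Decision(approved=score >= THRESHOLD, score=score, reasons=reasons)
```

The point of the design is that the explanation is produced together with the decision, so a reviewer sees which factors mattered most without having to inspect the model itself.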

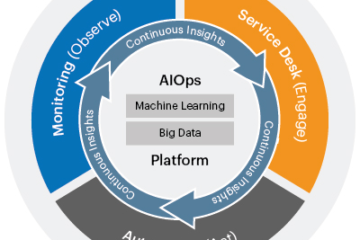

Auditing and monitoring

Auditing and monitoring are techniques for detecting and addressing potential problems in AI systems. AI systems may, for example, be audited on a regular basis to verify that they are functioning as intended.
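A minimal sketch of what monitoring might look like in code: every call to a model is recorded in an audit log, and a simple check over that log reports how often calls fail. The names `AuditLog`, `audited`, `error_rate`, and `toy_model` are hypothetical, and the in-memory list stands in for whatever durable storage a real audit trail would use.

```python
import json
import time
from typing import Callable, List


class AuditLog:
    """In-memory audit trail; a real system would use durable storage."""

    def __init__(self) -> None:
        self.records: List[dict] = []

    def record(self, **entry) -> None:
        entry["timestamp"] = time.time()
        self.records.append(entry)

    def dump(self) -> str:
        """Serialize the trail as one JSON object per line."""
        return "\n".join(json.dumps(r) for r in self.records)


def audited(model: Callable[[str], str], log: AuditLog) -> Callable[[str], str]:
    """Wrap a model so that every call, successful or not, is logged."""
    def wrapper(prompt: str) -> str:
        try:
            output = model(prompt)
        except Exception as exc:
            log.record(prompt=prompt, status="error", detail=repr(exc))
            raise
        log.record(prompt=prompt, status="ok", output=output)
        return output
    return wrapper


def error_rate(log: AuditLog) -> float:
    """Fraction of logged calls that failed; 0.0 for an empty log."""
    if not log.records:
        return 0.0
    errors = sum(1 for r in log.records if r["status"] == "error")
    return errors / len(log.records)


def toy_model(prompt: str) -> str:
    """Stand-in for a real AI system, used only for this example."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()
```

A periodic audit could then read `log.dump()` or compare `error_rate(log)` against an agreed limit to decide whether the system is still functioning as intended.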

The best approach to controlling AI capabilities will vary with the application. Regardless, all organizations that build or use AI systems should take steps to ensure that these systems are safe, ethical, and accountable.

The Rise of Powerful AI Tools

The emergence of powerful AI tools such as ChatGPT and Midjourney has raised concerns about the potential risks and unintended consequences of these systems. ChatGPT, for example, has been used to produce fake news stories and social media posts, while Midjourney has been used to generate realistic images of people and things that do not exist.

These concerns have prompted organizations to look for ways to maintain some control over AI systems. One method is AI capability control. By establishing boundaries, limits, and standards for AI systems, organizations can help mitigate risks and ensure that the systems are used responsibly.

AI Capability Control in the Future

The need for AI capability control will become more pressing as AI advances. Organizations will need to develop new and innovative methods of managing and regulating these powerful systems.

One promising area of research is the development of AI safety techniques. These techniques aim to ensure that AI systems are aligned with human values and do not pose a threat to humanity.

Another area of research is the development of AI governance frameworks. These frameworks would provide a set of rules and regulations for the development and use of artificial intelligence.

The future of AI capability control remains uncertain. However, it is clear that this is a critical issue that must be addressed. By taking steps to control AI systems, organizations can help ensure that they are used for good rather than harm.

Conclusion

Controlling AI capabilities is critical to ensuring that AI is used safely, ethically, and responsibly. By establishing boundaries, limits, and standards for AI systems, organizations can help mitigate risks and ensure that the systems are used for good.
