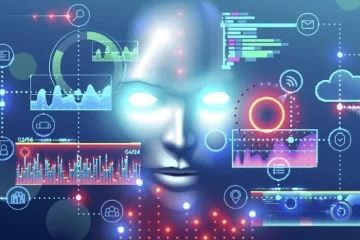

AI content detection is a fast-growing field. AI content detectors are software systems that use artificial intelligence techniques to identify and classify text produced by large language models (LLMs). LLMs are a category of AI systems that can generate and interpret human language. They are used in several domains, including machine translation, text summarization, and creative writing.

Several factors are driving the spread of AI content detection systems:

The increasing use of LLMs

LLMs are becoming markedly more capable, which has expanded their use across a wide range of applications. Their rise has also prompted growing concern that they could be used to spread fabricated news and other misleading material.

The demand for academic integrity

Students and freelance writers increasingly use LLMs to produce their assignments and articles, which raises concerns about academic integrity. This trend has increased the need for tools that can identify and deter plagiarism.

The need to protect businesses from fraud

Enterprises increasingly use LLMs to produce material for their marketing and advertising campaigns. However, the same capability can be used deceptively, for example to fabricate product reviews or generate spam.

AI content detectors can help address these challenges. They can play an important role in limiting the spread of disinformation, protecting academic integrity, and shielding businesses from fraud.

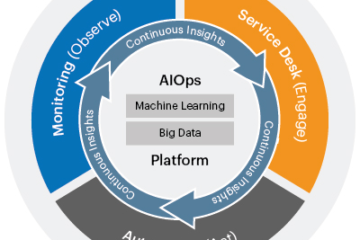

AI Content Detectors as Part of AI Capability Control

The spread of AI content detectors is part of a broader phenomenon known as AI capability control. AI capability control concerns the governance and oversight of AI systems. It involves establishing limits, constraints, and rules to ensure that AI is used safely, ethically, and responsibly.

The spread of powerful generative AI applications such as ChatGPT, Midjourney, and DALL-E has raised growing concern about the risks and unintended consequences of these systems. One example is the creation of deepfakes: manipulated video or audio recordings that falsely depict people doing or saying things they never did or said. Deepfakes can be used to spread false information, damage reputations, and potentially disrupt elections.

Growing concern about the risks of generative AI has contributed to the emergence of a movement opposing the advancement of AI technology. Searches for “anti-AI” have risen 214% over the preceding 12 months. The anti-AI movement advocates for software and regulations that constrain and govern AI technologies.

AI content detectors are a prominent example of an AI capability control mechanism. They can identify and flag material created by AI, which may help limit the spread of disinformation and other harmful content.
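To make the idea of "identify and flag" concrete, here is a minimal sketch of a flagging step. It assumes a detector is simply a function that returns a score for a piece of text. The scoring function below (`toy_uniformity_score`) is a hypothetical stand-in based on how uniform sentence lengths are; it is not how any real detector works, and the names and the threshold are illustrative assumptions.

```python
import re
from statistics import pstdev
from typing import Callable, List, Tuple


def toy_uniformity_score(text: str) -> float:
    """Hypothetical stand-in for a detector score in [0, 1].

    Higher means sentence lengths are more uniform. This is a toy
    heuristic for illustration only, NOT a real detection method.
    """
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # too little text to compare sentence lengths
    # Zero spread in sentence length maps to 1.0; more spread maps lower.
    return 1.0 / (1.0 + pstdev(lengths))


def flag_for_review(
    texts: List[str],
    score_fn: Callable[[str], float] = toy_uniformity_score,
    threshold: float = 0.5,  # assumed cut-off, chosen arbitrarily
) -> List[Tuple[str, float]]:
    """Return (text, score) pairs whose score meets the threshold."""
    scored = [(t, score_fn(t)) for t in texts]
    return [(t, s) for t, s in scored if s >= threshold]


if __name__ == "__main__":
    samples = [
        "The cat sat down. The dog ran off. The bird flew away.",
        "Stop. The quick brown fox jumped over the extremely lazy dog yesterday.",
    ]
    for text, score in flag_for_review(samples):
        print(f"{score:.2f}  {text}")
```

In a sketch like this, a flagged item is a candidate for human review rather than a verdict, and a real system would swap in a trained model for `score_fn`.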

Several factors drive the growing need for AI-based content detection systems:

- The emergence of AI-generated content: Advanced AI language models such as GPT-3 and Bard can produce text that closely resembles human writing, which makes realistic text easy to generate. As a result, AI-generated material is now widespread, and it serves both legitimate and fraudulent purposes.

- The need to protect intellectual property: AI-generated material can facilitate plagiarism of existing works, harming the reputation and livelihood of content creators. AI content detection systems can identify and report copied material, helping to safeguard the intellectual property rights of content creators.

- The need to combat misinformation and disinformation: AI-generated material can also be used to create and spread misleading or false information. AI content detection systems can help identify and flag such material, which helps protect the public.

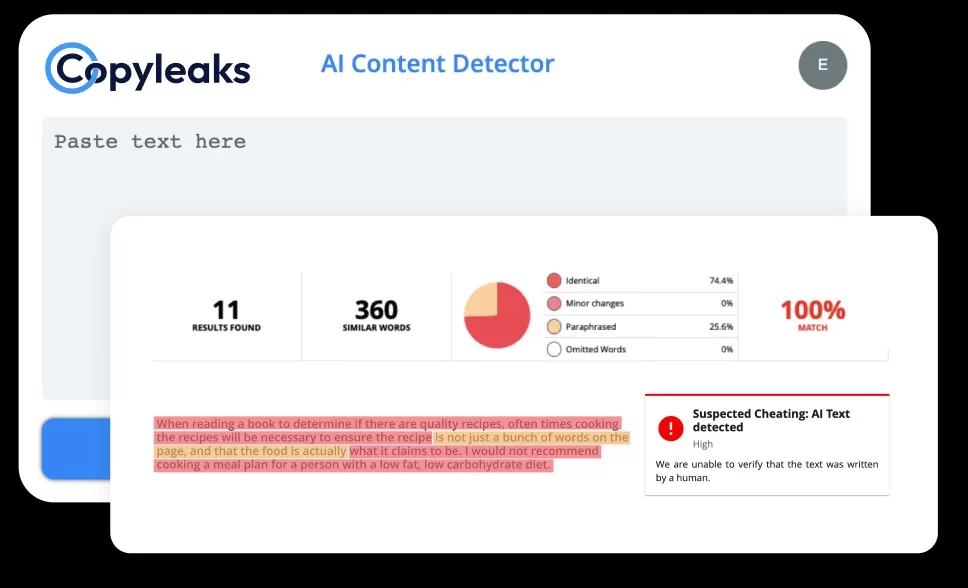

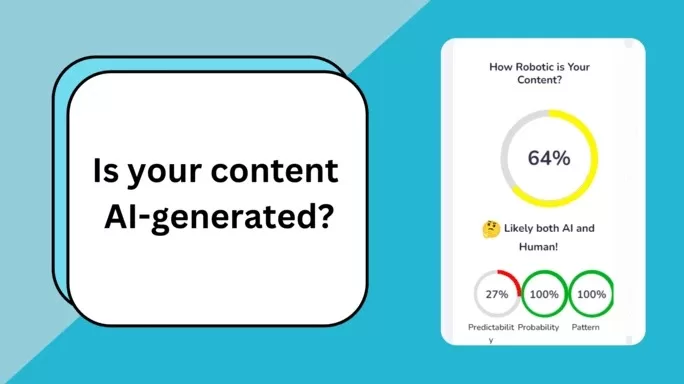

CopyLeaks and Crossplag are two startups that have introduced AI-based tools for detecting plagiarized content. Both have secured substantial funding and are gaining traction in the market.

Turnitin, a prominent anti-plagiarism platform, has also added AI content detection capabilities. This suggests that even well-established players in the anti-plagiarism industry see the need for AI content detection.

Overall, demand for AI content detectors is rising sharply. Both startups and established companies are investing in the sector, and the market is expected to keep growing for the foreseeable future.

Some further factors behind the need for AI content detectors:

- The increasing use of AI content in the workplace: Many companies use AI-generated material for marketing, such as blog posts. This content can be produced quickly and in large quantities to meet demand, which makes it necessary to verify that AI-generated content is original and accurate.

- The growing popularity of social media: Many people get their news and information from social media sites. However, social media can just as easily spread lies and half-truths. AI-driven detection tools can help identify and flag fake news on social media.

- The rise of deepfakes: A deepfake is a video or audio recording manipulated to make it seem that a person said or did something they never said or did. Deepfakes can be used to spread falsehoods or to ruin somebody’s reputation. AI content detectors can also help identify and flag deepfakes.

The market for AI content detectors is growing significantly as companies, social media platforms, and the general public become more aware of the risks of AI-generated material, misinformation, and disinformation.

The Future of AI Content Detection

AI content detection is still at an early stage of development, but it is growing rapidly. As AI models continue to advance in complexity and capability, the effectiveness and reliability of AI content detectors are expected to improve.

Therefore, we anticipate seeing more applications for AI content detectors in the future. For example, AI content detectors could be used to:

- Monitor social media platforms for misinformation and disinformation.

- Screen job applications for plagiarism.

- Detect fake news articles.

- Identify AI-generated content in academic papers.

- Flag AI-generated content that is being used for fraudulent purposes.

The ethics of developing AI content detection technology deserve public discussion. For instance, we need to consider whether it is possible to protect individuals’ rights to privacy and freedom of expression while preventing the harm that fake information causes to society.

We must also remain aware that AI-based content detection systems can be misused. For instance, such technology could be used to silence dissenting voices and marginalize particular groups in society.

In conclusion, AI content detection is an emerging technology poised to influence society at large. It should be used responsibly and ethically.